Don't Overengineer

It's Saturday. I just woke up. And I mean just woke up. You know those weird, fever-dream-like states you're in right before your alarm?

I was dreaming about code.

Yeah, it’s weird. I was trapped in this endless meeting room, and every time the app was slow, a project manager would slam a new, giant textbook on the table. "We need CQRS!" one shouted. "Rewrite it in Rust!" another yelled. "I've ordered a 12-node Kubernetes cluster!" a third one announced, while the app was just glitching in the corner, probably choking on a simple N+1 query.

I woke up in a cold sweat with a single, clear thought: We are all insane.

We love complexity. We love the new hotness. We love the idea of building a system so robust it could survive a nuclear blast, even when we’re just making a to-do list app. Our first instinct when we see a problem isn't to find the simplest solution, it's to find the most impressive one.

It’s like our app has a small leak, and we decide the best solution is to move to a new continent.

As I was lying there, staring at the ceiling, my dream-addled brain coughed up this... this list. It’s not a perfect, academic list. It’s the "Ladder of Sanity." It’s the order of operations we should follow when things get slow, from "duh, check this first" to "okay, call your family, you're not coming home for a month."

So, I grabbed my phone and typed it out. And now, for your benefit (and my therapy), here is my half-asleep manifesto on not overengineering your life away.

The Ladder of Sanity: How to Fix Problems Without Losing Your Mind

This is the order in which you should investigate performance issues. Stop and fix the problem at the lowest rung you can. DO NOT skip steps.

Step 0: The Foundation (You Already Messed Up, But It's OK)

This is stuff you should have done from the start.

- Good DB Design: Is your schema normalized? Are you using the right data types? You'd be surprised how many "slow query" problems are actually "bad schema" problems.

- Clean Code & Sane Patterns: Are you doing wildly inefficient things in your code? Are you fetching 10,000 items from the DB just to show 10 on the page? DRY (Don't Repeat Yourself) is great, but sometimes a little repetition is better than a "smart" abstraction that's 100x slower. Start with KISS (Keep It Simple, Stupid) and YAGNI (You Ain't Gonna Need It).

Let's make this concrete (with a Node.js/Postgres stack):

A common mistake in PostgreSQL is storing things like prices as VARCHAR or TEXT. Now you can't do SUM() calculations, and you have to CAST it in every query, which is slow and prevents index use. The right way is to use NUMERIC(10, 2) for money and TIMESTAMP WITH TIME ZONE (timestamptz) for all timestamps, because you're not a monster who enjoys time-zone bugs.

In Node.js, the classic sin is using fs.readFileSync() or any other ...Sync function inside an Express.js request handler. This blocks the event loop. While that one user is waiting for their file to be read, your entire server freezes. The fix is to always use the async versions, like await fs.promises.readFile(). The event loop "parks" that request, serves 1,000 other users, and comes back when the file is ready.
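Here's a tiny sketch of that difference using only Node's built-in modules. The temp file name and the function names are made up for the demo, and the "handlers" are plain functions rather than real Express routes:

```javascript
const fs = require("node:fs");
const os = require("node:os");
const path = require("node:path");

// Hypothetical demo file. Writing it synchronously is fine here because
// this runs once at startup, not inside a request handler.
const demoFile = path.join(os.tmpdir(), "ladder-of-sanity-demo.txt");
fs.writeFileSync(demoFile, "hello from disk");

// Blocking version: nothing else can run until the read finishes.
function handleBlocking() {
  return fs.readFileSync(demoFile, "utf8");
}

// Non-blocking version: the event loop stays free while the read is in flight.
function handleAsync() {
  return fs.promises.readFile(demoFile, "utf8");
}

async function demo() {
  const events = [];
  // Start the read but don't wait for it yet.
  const pending = handleAsync().then((text) => {
    events.push("file read");
    return text;
  });
  // This line runs while the read is still pending.
  events.push("other work served");
  const text = await pending;
  return { events, text };
}

demo().then(({ events }) => console.log(events));
```

The "other work served" entry lands first because the async read hands control back to the event loop instead of holding it hostage.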

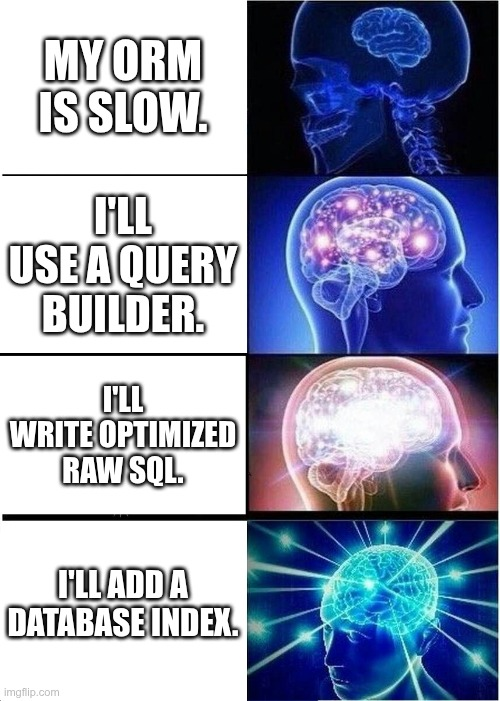

Step 1: The "99% of Your Problems Are Here" Rung (Querying & Indexing)

Seriously. Just... just look here first.

- App Querying (The ORM vs. Query Builder vs. Raw SQL Holy War):

This is a big one. As developers, we have three main ways to talk to our database, and our choice has huge performance implications.

- The ORM (Object-Relational Mapper): (e.g., Prisma, Sequelize). This is the "magic wand." You write pretty, object-oriented code like db.user.findFirst() and it gives you data.

  - Benefit: Incredibly fast for development and prototyping. It abstracts the database, so you could (in theory) swap from Postgres to MySQL. It handles a lot of security (like SQL injection) for you.

  - Loss: It's "magic." It hides the SQL from you, and it can generate wildly inefficient queries. This is where the dreaded N+1 Query Problem comes from. You trust the magic, and it decides to run 101 separate queries instead of one good JOIN.

- The Query Builder: (e.g., Knex.js). This is the happy middle-ground. You're still writing JavaScript, but you're building a SQL query. It looks like db('users').select('*').where('id', 1).

  - Benefit: Far more control than an ORM. You can build complex dynamic queries (adding if statements to chain on more .where() clauses). It's still database-agnostic and protects you from SQL injection.

  - Loss: It's more verbose than an ORM. You're thinking more about database tables and less about code objects.

- The Raw Query: (e.g., db.query('SELECT * FROM users...')). This is you, the database, and nothing in between. You are writing pure, unadulterated SQL as a string.

  - Benefit: Maximum power and performance. You can use every database-specific feature, every complex JOIN, every window function. If you can write it in psql, you can run it here.

  - Loss: Maximum danger. You are 100% responsible for preventing SQL injection (by always using parameterized queries). It's not portable. It can be ugly to read and a nightmare to build dynamic queries ("string concatenation-hell").

My standard is pretty simple, and it keeps me sane:

- I use an ORM for simple, fast development on a starter app.

- I use a Query Builder (or the ORM's simple query functions) for all the "normal" CRUD work: INSERT, UPDATE, DELETE, and SELECTs with one or two WHERE clauses.

- I use Raw Query only when I have a complex query that I've probably already optimized by hand in a SQL client (like a big reporting query with multiple JOINs and GROUP BYs).

No matter which one you use, the problem from my dream still happens. Here's that N+1 nightmare in code, where you accidentally misuse your ORM:

```javascript
// This is the N+1 nightmare:

// 1 query to get posts
const posts = await db.post.findMany({ take: 100 });

// 100 MORE queries to get authors
const postsWithAuthors = await Promise.all(
  posts.map(async (post) => {
    const author = await db.user.findUnique({ where: { id: post.authorId } });
    return { ...post, author };
  })
);

// Total: 101 database roundtrips. Ouch.
```

And here's how you fix it, just by telling the ORM what you actually want:

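```javascript
// The simple, correct way:

// With Prisma
const posts = await db.post.findMany({
  take: 100,
  // Loads each post's author along with it: one batched query or a JOIN,
  // depending on Prisma's load strategy, never one query per post.
  include: { author: true },
});

// Total: 1 (or 2) efficient queries.
```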
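If you're not on an ORM that batches for you, the same trick is easy to do by hand: collect the IDs, fetch them in one go, and stitch the results together in memory. Here's a rough sketch with a fake in-memory "database" that only counts round trips. Every name in it (createFakeDb, findUsersByIds, and so on) is invented for illustration:

```javascript
// In-memory stand-in for a database client. It only counts round trips.
function createFakeDb() {
  const users = new Map([
    [1, { id: 1, name: "Ada" }],
    [2, { id: 2, name: "Linus" }],
  ]);
  const posts = [
    { id: 10, authorId: 1, title: "First" },
    { id: 11, authorId: 2, title: "Second" },
    { id: 12, authorId: 1, title: "Third" },
  ];
  const db = {
    queryCount: 0,
    async findPosts() {
      db.queryCount++;
      return posts.map((post) => ({ ...post }));
    },
    async findUserById(id) {
      db.queryCount++;
      return users.get(id);
    },
    // Think: SELECT * FROM users WHERE id IN (...)
    async findUsersByIds(ids) {
      db.queryCount++;
      return ids.map((id) => users.get(id)).filter(Boolean);
    },
  };
  return db;
}

// N+1: one query for the posts, then one more per post.
async function loadPostsNPlusOne(db) {
  const posts = await db.findPosts();
  return Promise.all(
    posts.map(async (post) => ({
      ...post,
      author: await db.findUserById(post.authorId),
    }))
  );
}

// Batched: one query for the posts, one for all the authors.
async function loadPostsBatched(db) {
  const posts = await db.findPosts();
  const authorIds = [...new Set(posts.map((post) => post.authorId))];
  const authors = await db.findUsersByIds(authorIds);
  const authorsById = new Map(authors.map((author) => [author.id, author]));
  return posts.map((post) => ({ ...post, author: authorsById.get(post.authorId) }));
}
```

With three posts, the first version makes 4 round trips and the second makes 2. The batched version stays at 2 no matter how many posts you load.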

- DB Tuning & Indexing (PostgreSQL):

This is the magic bullet. Let's say your users table has 5 million rows. A SELECT * FROM users WHERE email = 'user@example.com' query is taking 8 seconds.

To figure out why, you run EXPLAIN ANALYZE SELECT * FROM users WHERE email = 'user@example.com';. The output shows a Parallel Seq Scan (a sequential scan). This means Postgres is reading the entire 5-million-row table to find that one email.

The fix is one line of SQL:

```sql
CREATE INDEX idx_users_on_email ON users (email);
```

You run EXPLAIN ANALYZE again. Now it shows an Index Scan. The query takes 4 milliseconds. You are a hero.
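If the difference between a Seq Scan and an Index Scan feels abstract, here's a rough in-memory analogy in JavaScript. To be clear, this is not how Postgres works internally (it uses an on-disk B-tree, not a Map), and all the function names are invented. It only shows why "look at every row" and "jump straight to the row" are such different amounts of work:

```javascript
// Rough analogy only: Postgres uses a B-tree index on disk, not a JS Map.
function buildUsers(count) {
  const users = [];
  for (let i = 0; i < count; i++) {
    users.push({ id: i, email: `user${i}@example.com` });
  }
  return users;
}

// "Seq Scan": inspect rows one by one until the email matches.
function findBySeqScan(users, email) {
  let rowsInspected = 0;
  for (const user of users) {
    rowsInspected++;
    if (user.email === email) return { user, rowsInspected };
  }
  return { user: undefined, rowsInspected };
}

// "CREATE INDEX": pay once, up front, to build a lookup keyed by email.
function buildEmailIndex(users) {
  const index = new Map();
  for (const user of users) index.set(user.email, user);
  return index;
}

// "Index Scan": go straight to the matching row.
function findByIndex(index, email) {
  return { user: index.get(email), rowsInspected: 1 };
}

const users = buildUsers(100000);
const emailIndex = buildEmailIndex(users);
const target = "user99999@example.com";

console.log(findBySeqScan(users, target).rowsInspected); // 100000
console.log(findByIndex(emailIndex, target).rowsInspected); // 1
```

The index isn't free: it costs memory here, and in Postgres it costs disk space plus a little extra work on every write. For a column you filter on constantly, that's usually a bargain.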

Step 2: The "Free Speed" Rung (Caching)

Okay, your query is as fast as it can be, but it's still heavy and gets called a lot.

So, your homepage shows "Top 10 Articles," right? That query is heavy and runs on every single page load. Why? The fastest query is the one you never run.

This is where caching (like Redis) comes in. You just grab the data and store it in fast memory for 10 minutes.

```javascript
// Caching in Node.js + Redis
// Assumes app (Express), db, and redis are set up elsewhere, and that redis
// is an ioredis-style client. With node-redis v4+, pass { EX: 600 } instead.
const CACHE_KEY = "top-10-articles";

app.get("/", async (req, res) => {
  // 1. Check cache first
  const cachedData = await redis.get(CACHE_KEY);
  if (cachedData) {
    return res.json(JSON.parse(cachedData));
  }

  // 2. Not in cache? Go to the DB
  const topArticles = await db.query(YOUR_HEAVY_QUERY);

  // 3. Store in cache for next time. Expire after 10 minutes.
  await redis.set(CACHE_KEY, JSON.stringify(topArticles), "EX", 600);

  return res.json(topArticles);
});
```
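Once you've written that check-load-store dance twice, it's worth pulling it into a helper. Here's a minimal sketch of the pattern (often called cache-aside) using an in-memory Map as a stand-in for Redis, so it runs without any server. The names createTtlCache and cached are made up, and a real Redis-backed version would also need the JSON.stringify/JSON.parse step:

```javascript
// In-memory stand-in for Redis with per-key expiry.
// `now` is injectable so the expiry logic can be exercised without waiting.
function createTtlCache(now = Date.now) {
  const entries = new Map();
  return {
    get(key) {
      const entry = entries.get(key);
      if (!entry) return undefined;
      if (entry.expiresAt <= now()) {
        entries.delete(key); // expired: behave as if it was never cached
        return undefined;
      }
      return entry.value;
    },
    set(key, value, ttlSeconds) {
      entries.set(key, { value, expiresAt: now() + ttlSeconds * 1000 });
    },
  };
}

// Cache-aside: return the cached value if present, otherwise run the
// loader, store its result, and return it.
async function cached(cache, key, ttlSeconds, loader) {
  const hit = cache.get(key);
  if (hit !== undefined) return hit;
  const fresh = await loader();
  cache.set(key, fresh, ttlSeconds);
  return fresh;
}
```

The route handler then shrinks to something like cached(cache, "top-10-articles", 600, () => db.query(YOUR_HEAVY_QUERY)), and the heavy query only runs when the cache is cold or expired.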

Step 3: The "Okay, We're Getting Serious" Rung (DB Architecture)

You're a massive success. Your site is getting hammered with traffic, and your poor database server is sweating. Caching helps, but the write traffic is high, or the read traffic is just too diverse to cache everything.

The classic sign you need this is when your app is, say, 95% reads (viewing products) and 5% writes (buying products), and the sheer volume of reads is slowing down the writes.

This is when you set up a Postgres Read Replica. It's a read-only copy of your database. Then, in your Node.js code, you just need to be "replica-aware." You create two database connection pools:

```javascript
// Node.js with two connection pools
// Assumes app is an Express app and both config objects are defined elsewhere.
const { Pool } = require('pg');

// Pool 1: Connects to the main (primary) database
const primaryPool = new Pool(PRIMARY_DB_CONFIG);

// Pool 2: Connects to the read-only (replica) database
const replicaPool = new Pool(REPLICA_DB_CONFIG);

// USAGE:

// For anything that changes data (writes):
app.post('/checkout', async (req, res) => {
  await primaryPool.query('INSERT INTO orders (...)', [ /* ... */ ]);
  res.sendStatus(201);
});

// For anything that ONLY reads data:
app.get('/products', async (req, res) => {
  // pg resolves to a result object; the actual rows live on result.rows
  const { rows: products } = await replicaPool.query('SELECT * FROM products');
  res.json(products);
});
```

This splits your traffic. All the "shopping" hits the replica, leaving the primary database free and fast to process new orders.
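One thing to watch: Postgres streaming replication is asynchronous by default, so a replica can be a moment behind the primary. If a user places an order and you immediately read it back from the replica, it might not be there yet. A small wrapper makes that choice explicit at every call site. This is only a sketch: createDb, the fresh option, and the fake pools are all invented here, and in a real app you'd pass in the two pg pools instead:

```javascript
// Stand-in pools that just record what they were asked to run.
// In a real app these would be the primary and replica pg Pool instances.
function createFakePool(name) {
  return {
    name,
    queries: [],
    async query(sql, params = []) {
      this.queries.push(sql);
      return { rows: [], servedBy: name };
    },
  };
}

function createDb({ primary, replica }) {
  return {
    // Anything that changes data goes to the primary.
    write(sql, params) {
      return primary.query(sql, params);
    },
    // Reads go to the replica, unless the caller needs to see a row
    // that was written a moment ago (fresh: true), in which case the
    // read goes to the primary.
    read(sql, params, { fresh = false } = {}) {
      const pool = fresh ? primary : replica;
      return pool.query(sql, params);
    },
  };
}

const db = createDb({
  primary: createFakePool("primary"),
  replica: createFakePool("replica"),
});
```

Browsing products would call db.read(...), checkout would call db.write(...), and the "show me the order I just placed" page would call db.read(..., { fresh: true }).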

Step 4: The "Now We're Architects" Rung (Decoupling)

Your app is now a giant, complex monolith. One part (like "video processing") is slowing everything else down. When someone uploads a video, the whole site grinds to a halt.

This is a big one. Imagine a user signs up. Your Node.js API handler has to:

- await db.createUser(...) (Fast)

- await sendWelcomeEmail(...) (Slow, 3rd party API)

- await generateProfilePDF(...) (Very slow, CPU-intensive)

- await syncToAnalytics(...) (Slow, 3rd party API)

The user is staring at a loading spinner for 15 seconds, and if the email API times out, the entire signup fails.

The sane way to do this is to only do the essential work (creating the user) and "defer" the rest using a message broker (like RabbitMQ or Kafka).

```javascript
// In your Express signup route:
app.post("/signup", async (req, res) => {
  // 1. Do the ONLY thing that matters right now.
  const user = await db.createUser(req.body);

  // 2. Publish an event. "Hey, someone signed up!"
  // This is instant.
  await messageBroker.publish("user.signed_up", { userId: user.id });

  // 3. Respond to the user immediately.
  res.status(201).json(user); // Signup is "done" in < 100ms
});

// In a SEPARATE Node.js process (a "worker"):
messageBroker.subscribe("user.signed_up", async (msg) => {
  // This runs in the background, not blocking the user.
  await sendWelcomeEmail(msg.userId);
  await generateProfilePDF(msg.userId);
  await syncToAnalytics(msg.userId);
});
```
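One wrinkle in a worker that awaits its tasks one after another: if the email API throws, the PDF and the analytics sync never run. Here's one way to keep the tasks independent, sketched with Node's built-in EventEmitter standing in for the broker. The three task functions are fakes (the email one fails on purpose), and a real worker would lean on the broker's own retry or dead-letter features rather than just collecting a report:

```javascript
const { EventEmitter } = require("node:events");

// In-process stand-in for RabbitMQ/Kafka, just for this sketch.
const broker = new EventEmitter();

// Fake tasks. The email one fails on purpose to show the isolation.
async function sendWelcomeEmail(userId) {
  throw new Error("email API timed out");
}
async function generateProfilePDF(userId) {
  return `pdf-for-${userId}`;
}
async function syncToAnalytics(userId) {
  return `synced-${userId}`;
}

// Run every task and record which ones failed,
// instead of letting the first failure abort the rest.
async function handleUserSignedUp({ userId }) {
  const names = ["email", "pdf", "analytics"];
  const results = await Promise.allSettled([
    sendWelcomeEmail(userId),
    generateProfilePDF(userId),
    syncToAnalytics(userId),
  ]);
  return results.map((result, i) => ({
    task: names[i],
    ok: result.status === "fulfilled",
    error: result.status === "rejected" ? result.reason.message : undefined,
  }));
}

broker.on("user.signed_up", (msg) => {
  handleUserSignedUp(msg).then((report) => console.log(report));
});

// Somewhere in the signup route:
broker.emit("user.signed_up", { userId: 42 });
```

The signup itself already succeeded by the time any of this runs, so a flaky email provider now costs you one retry instead of one customer.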

Your main app is now fast and responsive again.

Step 5: The "Deep Magic" Rung (Runtime & Web Server)

You've done all of the above. You've indexed, you've cached, you've scaled. But you're still hitting a wall.

What if your Node.js code is fast, but your server has 32 CPU cores and your node app.js process is only using one? Node.js runs your JavaScript on a single thread, so that one core is at 100% CPU while the other 31 are asleep.

For us Node folks, this has a very specific, easy fix. You run your app in Cluster Mode.

The easiest way to do this is with a process manager like PM2. Instead of just running node app.js, you run this one command:

```shell
pm2 start app.js -i max
```

The -i max flag tells PM2 to launch one Node.js worker process on every single CPU core. It automatically load-balances requests across them. You just went from using 1/32nd of your server to using all of it.

Step 6: The "Final Boss" Rung (The Nuke)

You are now running a global, planet-scale application. You have a team of 500 engineers. You have optimized everything, and you've discovered that the fundamental limitations of your chosen language are the problem.

This is it. The "rewrite it in Rust/Go/Elixir" moment. This is what everyone jokes about doing at Step 1, but it's the absolute, final, last-ditch resort. It is expensive, it is risky, and you should only ever consider it when you have empirical data proving that every other rung on this ladder has been climbed and optimized to its absolute limit.

And even then, you don't rewrite everything.

Let's say you're building a real-time analytics dashboard that processes 1 million events per second and needs to do heavy math on them. You finally admit that Node.js isn't the best tool for heavy, parallelized number-crunching.

You write one new, tiny microservice in Go or Python (with NumPy) to handle just that math. Your main Node.js app still handles the web requests, user auth, and everything else, but it now calls this new, specialized service for the heavy lifting.

The Dream's Over

The dream is fading now, and the coffee is kicking in. The point is, engineering isn't about finding the most complex, impressive solution. It's about finding the simplest, most direct solution that solves the problem.

Don't use a sledgehammer to crack a nut. Especially if the "nut" in question is just a missing index and the "sledgehammer" is a six-month rewrite.

Now, if you'll excuse me, I'm going to go enjoy my weekend. And try not to dream about database replication.
